Chapter 1 Introduction

With information growing at exponential rates, it’s no surprise that historians are referring to this period of history as the Information Age. The increasing speed at which data is being collected has created new opportunities and is poised to create even more. This chapter presents the tools that have been used to solve large-scale data challenges and introduces Apache Spark as a leading tool that is democratizing our ability to process data at large scale. We will then introduce the R computing language, which was specifically designed to simplify data analysis. It is then natural to ask what the outcome would be from combining the ease of use provided by R with the compute power available through Apache Spark. This will lead us to introduce sparklyr, a project merging R and Spark into a powerful tool that is easily accessible to all.

We will then present the prerequisites, tools and steps you will need to have Spark and R working on your computer with ease. You will learn how to install and initialize Spark, get introduced to common operations and complete your very first data processing task. It is the goal of this chapter to help anyone grasp the concepts and tools required to start tackling large-scale data challenges which, until recently, were only accessible to very few organizations.

After this chapter, you will move on to learning how to analyze large-scale data, followed by building models capable of predicting trends and discovering information hidden in vast amounts of data. At that point, you will have the tools required to perform data analysis and modeling at scale. Subsequent chapters will help you move away from your local computer into the computing clusters required to solve many real-world problems. The last chapters will present additional topics, like real-time data processing and graph analysis, which you will need to truly master the art of analyzing data at any scale. The last chapter of this book will give you tools and inspiration to consider contributing back to this project and many others.

We hope this is a journey you will enjoy, one that will help you solve problems in your professional career and, with our efforts combined, nudge the world into making better decisions that can benefit us all.

1.1 Background

As humans, we have been storing, retrieving, manipulating, and communicating information since the Sumerians in Mesopotamia developed writing in about 3000 BC. Based on the storage and processing technologies employed, it is possible to distinguish four distinct phases of development: pre-mechanical (3000 BC – 1450 AD), mechanical (1450–1840), electromechanical (1840–1940), and electronic (1940–present) (Laudon, Traver, and Laudon 1996).

Mathematician George Stibitz used the word digital to describe fast electric pulses back in 1942 (Ceruzzi 2012) and, to this day, we describe information stored electronically as digital information. In contrast, analog information represents everything we have stored by any non-electronic means such as handwritten notes, books, newspapers, and so on (Webster 2006).

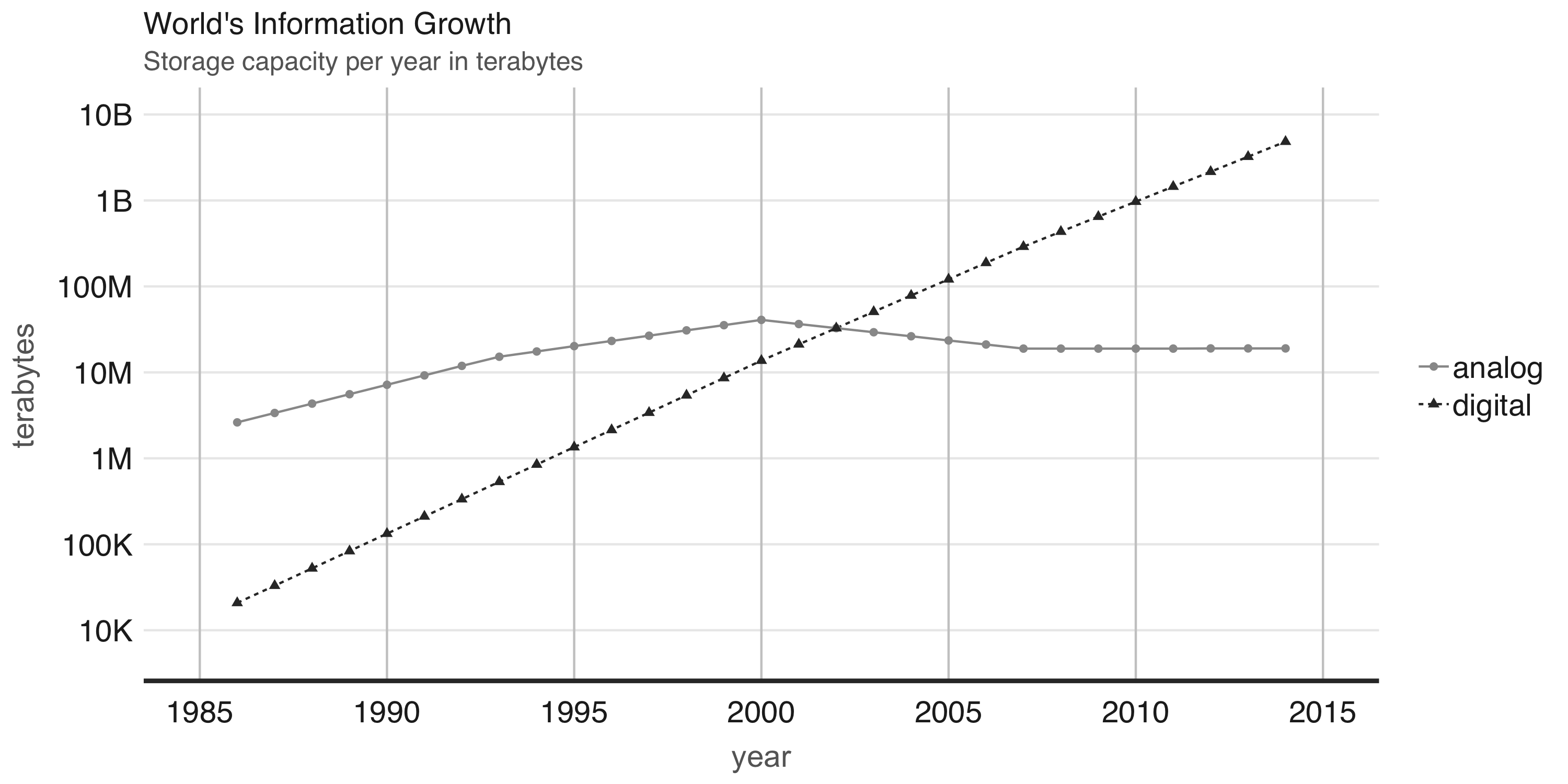

The World Bank report on digital development provides an estimate of digital and analog information stored over the last decades (Group 2016). This report noted that digital information surpassed analog information around 2003. At that time, there were about 10 million terabytes of digital information, roughly the capacity of 10 million computer drives today. However, a more relevant finding from this report was that our footprint of digital information is growing at exponential rates:

FIGURE 1.1: World’s capacity to store information.

With the ambition to provide tools capable of searching all this new digital information, many companies attempted to provide such functionality with what we know today as search engines, used when searching the web. Given the vast amount of digital information, managing information at this scale was a challenging problem. Search engines were unable to store all the web page information required to support web searches in a single computer. This meant that they had to split information into several files and store them across many machines. This approach became known as the Google File System, described in a research paper published by Google in 2003 (Ghemawat, Gobioff, and Leung 2003).

1.1.1 Hadoop

One year later, Google published a new paper describing how to perform operations across the Google File System; this approach came to be known as MapReduce (Dean and Ghemawat 2008). As you would expect, there are two operations in MapReduce: Map and Reduce. The map operation provides an arbitrary way to transform each file into a new file, while the reduce operation combines two files. Both operations require custom computer code, but the MapReduce framework takes care of automatically executing them across many computers at once. These two operations are sufficient to process all the data available on the web, while also providing enough flexibility to extract meaningful information from it.

For example, we can use MapReduce to count words in two different text files. The mapping operation splits each word in the original file and outputs a new word-counting file with a mapping of words and counts. The reduce operation can be defined to take two word-counting files and combine them by aggregating the totals for each word; this last file will contain a list of word counts across all the original files.

FIGURE 1.2: Simple MapReduce Example.
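The word-count example above can be sketched in a few lines of R. This is a single-machine illustration under stated assumptions, not how Hadoop implements MapReduce: the two input files are represented as made-up character vectors, and the function names map_word_count() and reduce_word_count() are hypothetical.

```r
# Two small "files", represented as character vectors of lines.
# The contents are made up for illustration.
file_a <- c("hello world", "hello spark")
file_b <- c("hello r", "spark and r")

# Map: transform one file into a word-count "file" (a named integer vector).
map_word_count <- function(lines) {
  words <- unlist(strsplit(tolower(lines), "[^a-z]+"))
  words <- words[words != ""]
  counts <- table(words)
  setNames(as.integer(counts), names(counts))
}

# Reduce: combine two word-count files by adding the totals for each word.
reduce_word_count <- function(a, b) {
  words <- union(names(a), names(b))
  totals <- integer(length(words))
  names(totals) <- words
  totals[names(a)] <- totals[names(a)] + a
  totals[names(b)] <- totals[names(b)] + b
  totals[order(names(totals))]
}

# Apply the map step to each file, then fold the results with the reduce step.
mapped <- lapply(list(file_a, file_b), map_word_count)
word_counts <- Reduce(reduce_word_count, mapped)
print(word_counts)
```

Because the reduce step only ever combines two word-count files, the same function can merge any number of mapped files, which is the property a framework relies on to spread the work across many machines.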

Counting words is often the most basic MapReduce example, but MapReduce can also be used for much more sophisticated and interesting applications. For instance, MapReduce can be used to rank web pages in Google’s PageRank algorithm, which assigns ranks to web pages based on the count of hyperlinks linking to a web page and the rank of the page linking to it.

After these papers were released by Google, a team at Yahoo worked on implementing the Google File System and MapReduce as a single open source project. This project was released in 2006 as Hadoop, with the Google File System implemented as the Hadoop Distributed File System, or HDFS for short. The Hadoop project made distributed file-based computing accessible to a wider range of users and organizations, which enabled them to make use of MapReduce beyond web data processing.

While Hadoop provided support to perform MapReduce operations over a distributed file system, it still required MapReduce operations to be written with code every time a data analysis was run. To improve on this tedious process, the Hive project, released in 2008 by Facebook, brought Structured Query Language (SQL) support to Hadoop. This meant that data analysis could now be performed at large scale without the need to write code for each MapReduce operation; instead, one could write generic data analysis statements in SQL that are much easier to understand and write.

1.1.2 Spark

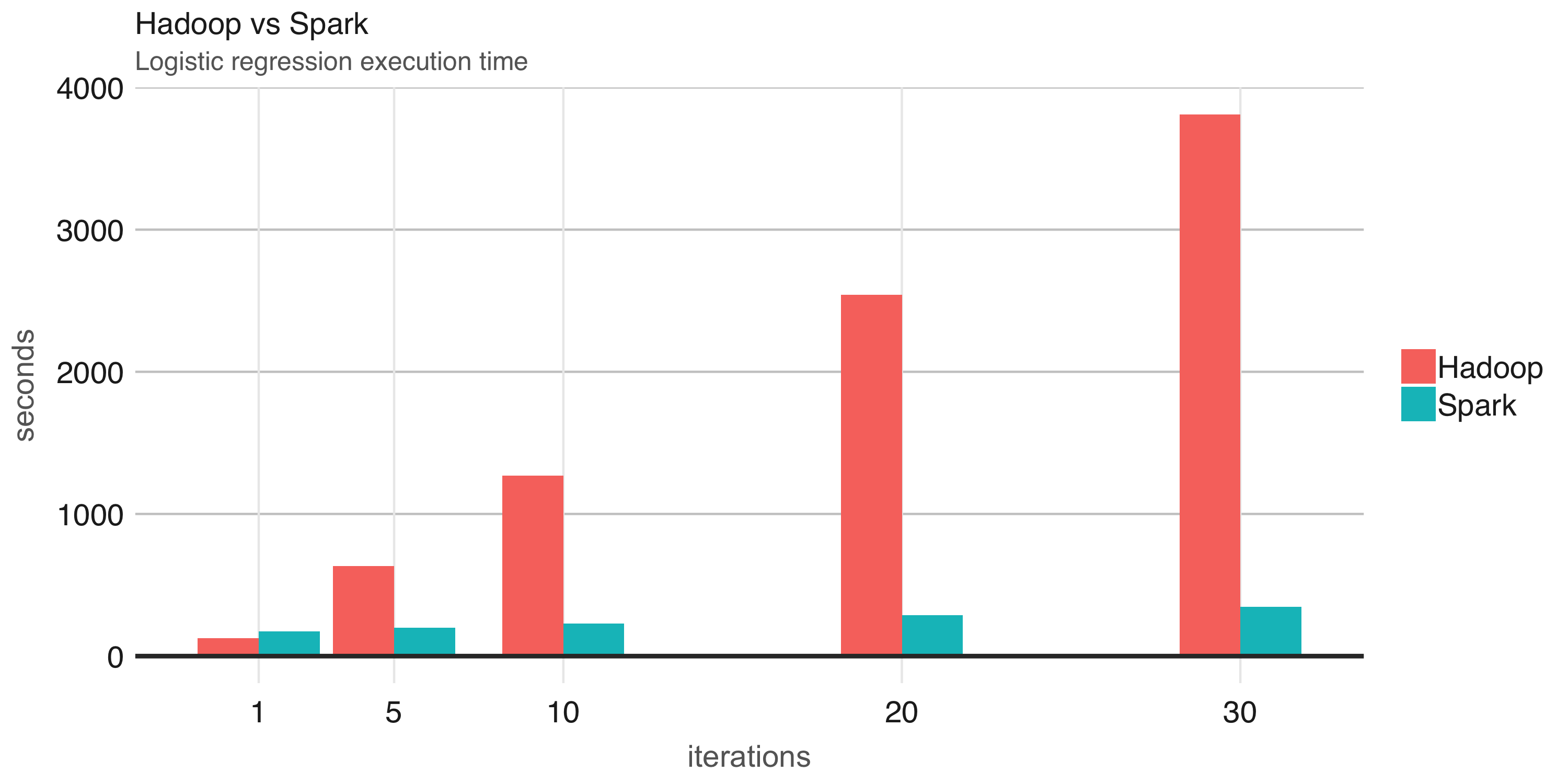

In 2009, Apache Spark began as a research project at UC Berkeley’s AMPLab to improve on MapReduce. Specifically, Spark provides a richer set of verbs beyond MapReduce that facilitate optimizing code running in multiple machines, and it loads data in-memory, making operations much faster than Hadoop’s on-disk storage. One of the earliest results showed that logistic regression, a data modeling technique that will be introduced in the modeling chapter, ran 10 times faster in Spark than in Hadoop by making use of in-memory datasets (Zaharia et al. 2010).

FIGURE 1.3: Logistic regression performance in Hadoop and Spark.

While Spark is well known for its in-memory performance, Spark was designed to be a general execution engine that works both in-memory and on-disk. For instance, Spark holds records in large-scale sorting, where data was not loaded in-memory; rather, Spark made use of improvements in network serialization, network shuffling and efficient use of the CPU’s cache to dramatically improve performance. For comparison, sorting 100 terabytes of data took 72 minutes and 2100 computers using Hadoop, but only 23 minutes and 206 computers using Spark. Spark also holds the record in the cloud sorting benchmark, which makes Spark the most cost-effective solution for large-scale sorting.

| | Hadoop Record | Spark Record |
|---|---|---|
| Data Size | 102.5 TB | 100 TB |
| Elapsed Time | 72 mins | 23 mins |
| Nodes | 2100 | 206 |
| Cores | 50400 | 6592 |
| Disk | 3150 GB/s | 618 GB/s |
| Network | 10Gbps | 10Gbps |
| Sort rate | 1.42 TB/min | 4.27 TB/min |
| Sort rate / node | 0.67 GB/min | 20.7 GB/min |

In 2010, Spark was released as an open source project and then donated to the Apache Software Foundation in 2013. Spark is licensed under the Apache 2.0 license, which allows you to freely use, modify, and distribute it. In 2015, Spark reached more than 1000 contributors, making it one of the most active projects in the Apache Software Foundation.

This gives an overview of how Spark came to be, which we can now use to formally introduce Apache Spark as follows:

“Apache Spark is a fast and general engine for large-scale data processing.”

To help us understand this definition of Apache Spark, we will break it down as follows:

- Data Processing: Data processing is the collection and manipulation of items of data to produce meaningful information(French 1996).

- General: Spark optimizes and executes parallel generic code, as in, there are no restrictions as to what type of code one can write in Spark.

- Large-Scale: One can interpret this as cluster-scale, as in, a set of connected computers working together to accomplish specific goals.

- Fast: Spark is much faster than its predecessor by making efficient use of memory, network and CPUs to speed up data processing algorithms in a computing cluster.

Since Spark is general, you can use Spark to solve many problems, from calculating averages to approximating the value of Pi, predicting customer churn, aligning protein sequences or analyzing high energy physics at CERN.

Describing Spark as large scale implies that a good use case for Spark is tackling problems that can be solved with multiple machines. For instance, when data does not fit in a single disk drive or does not fit into memory, Spark is a good candidate to consider.

Since Spark is fast, it is worth considering for problems that may not be large-scale, but where using multiple processors could speed up computation. For instance, sorting large datasets or CPU-intensive models could also benefit from running in Spark.

Therefore, Spark is good at tackling large-scale data processing problems, usually known as big data (data sets that are more voluminous and complex than traditional ones), but it is also good at tackling large-scale computation problems, known as big compute (tools and approaches using a large amount of CPU and memory resources in a coordinated way).

Big data and big compute problems are usually easy to spot – if the data does not fit into a single machine, you might have a big data problem; if the data fits into a single machine but a process over the data takes days, weeks or even months to compute, you might have a big compute problem.

However, there is also a third problem space where neither data nor compute is necessarily large-scale and yet there are significant benefits to using Spark. This third problem space breaks down into a few use cases:

- Velocity: Suppose you have a dataset of 10 gigabytes in size and a process that takes 30 minutes to run over this data; this is by no means big compute nor big data. However, if you happen to be researching ways to improve the accuracy of your models, reducing the runtime down to 3 minutes is a significant improvement, which can lead to significant advances and productivity gains by increasing the velocity at which you can analyze data. Alternatively, you might need to process data faster, for stock trading for instance. While 3 minutes could seem fast enough, it can be way too slow for real-time data processing, where you might need to process data in a few seconds or even in a few milliseconds.

- Variety: You could also have an efficient process to collect data from many sources into a single location, usually a database; this process could already be running efficiently and close to real time. Such processes are known as ETL (Extract-Transform-Load); data is extracted from multiple sources, transformed to the required format and loaded into a single data store. While this has worked for years, the tradeoff of this approach is that adding a new data source is expensive. Since the system is centralized and tightly controlled, making changes could cause the entire process to halt; therefore, adding a new data source usually takes too long to be implemented. Instead, one can store all data in its natural format and process it as needed using cluster computing; this architecture is currently known as a data lake. In addition, storing data in its raw format allows you to process a variety of new file formats like images, audio and video without having to figure out how to fit them into conventional structured storage systems.

- Veracity: Data can vary greatly in quality, which might require special analysis methods to improve its accuracy. For instance, suppose you have a table of cities with values like San Francisco, Seattle and Boston; what happens when the data contains a misspelled entry like “Bston”? In a relational database, this invalid entry might get dropped; however, dropping values is not necessarily the best approach in all cases. You might want to correct this field by making use of geocodes, cross-referencing data sources or attempting a best-effort match. Therefore, understanding the veracity of the original data source, and what accuracy your particular analysis needs, can yield a better outcome in many cases.

If we include “Volume” as a synonym for big data, you get the mnemonic people refer to as the four ’V’s of big data; others have gone as far as expanding this to five or even ten Vs of big data. Mnemonics aside, cluster computing is being used today in more innovative ways, and it is not uncommon to see organizations experimenting with new workflows and a variety of tasks that were traditionally uncommon for cluster computing. Much of the hype attributed to big data falls into this space where, strictly speaking, one is not handling big data but there are still benefits from using tools designed for big data and big compute. Our hope is that this book will help you understand the opportunities and limitations of cluster computing and, specifically, the opportunities and limitations of using Apache Spark with R.

1.1.3 R

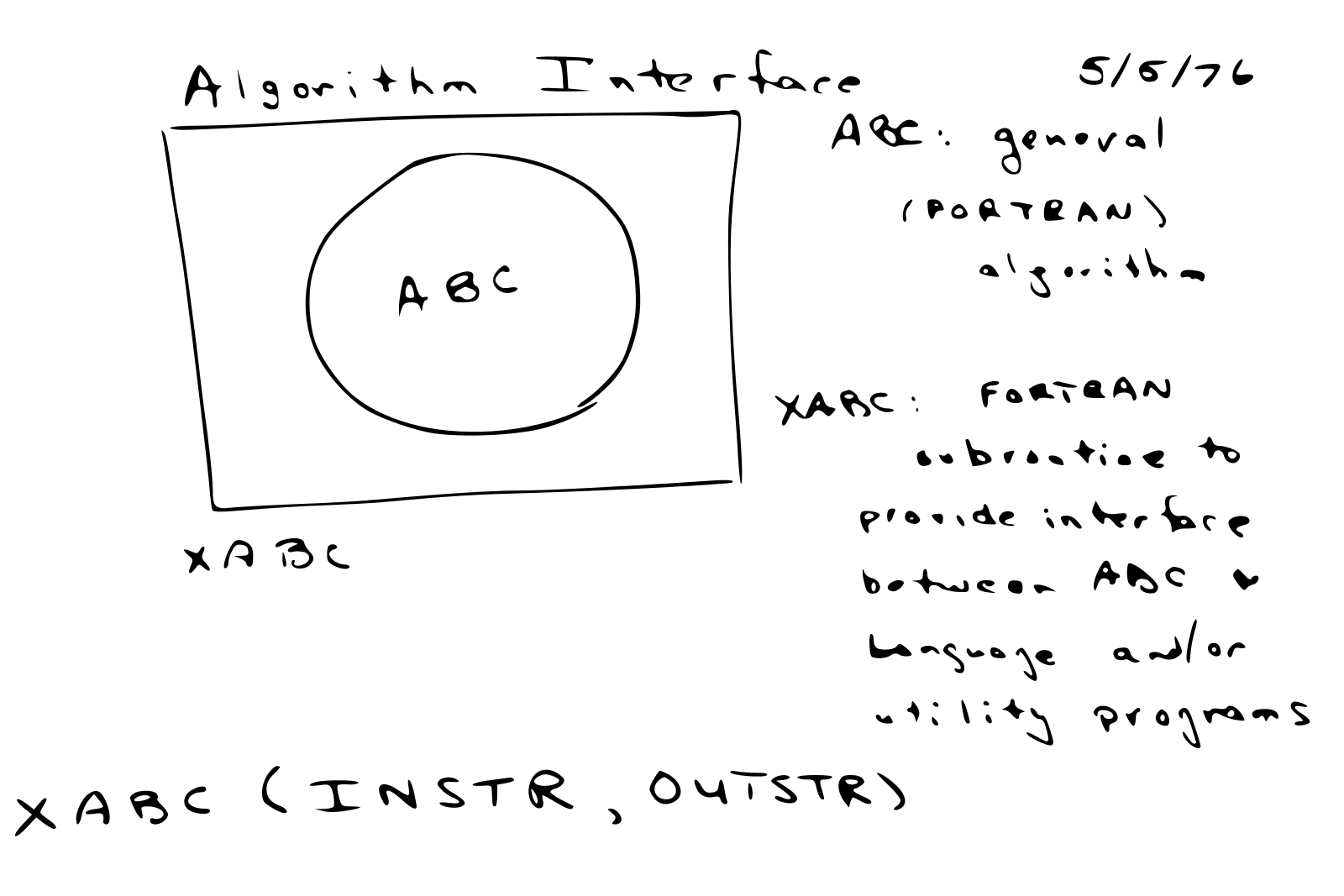

The R computing language has its origins in the S language, created at Bell Laboratories. R itself was not created at Bell Labs, but its predecessor, the S computing language, was. Rick Becker explained at useR! 2016 that, at that time at Bell Labs, computing was done by calling subroutines written in the Fortran language which, apparently, were not pleasant to deal with. The S computing language was designed as an interface language to solve particular problems without having to worry about other languages, such as Fortran. The creator of S, John Chambers, describes how S was designed to provide an interface that simplifies data processing through the following diagram:

FIGURE 1.4: Interface language diagram by John Chambers - Rick Becker useR 2016.

R is a modern and free implementation of S, specifically:

R is a programming language and free software environment for statistical computing and graphics.

While working with data, I believe there are two strong arguments for using R:

- The R Language was designed by statisticians for statisticians, meaning, this is one of the few successful languages designed for non-programmers; so learning R will probably feel more natural. Additionally, since the R language was designed to be an interface to other tools and languages, R allows you to focus more on modeling and less on peculiarities of computer science and engineering.

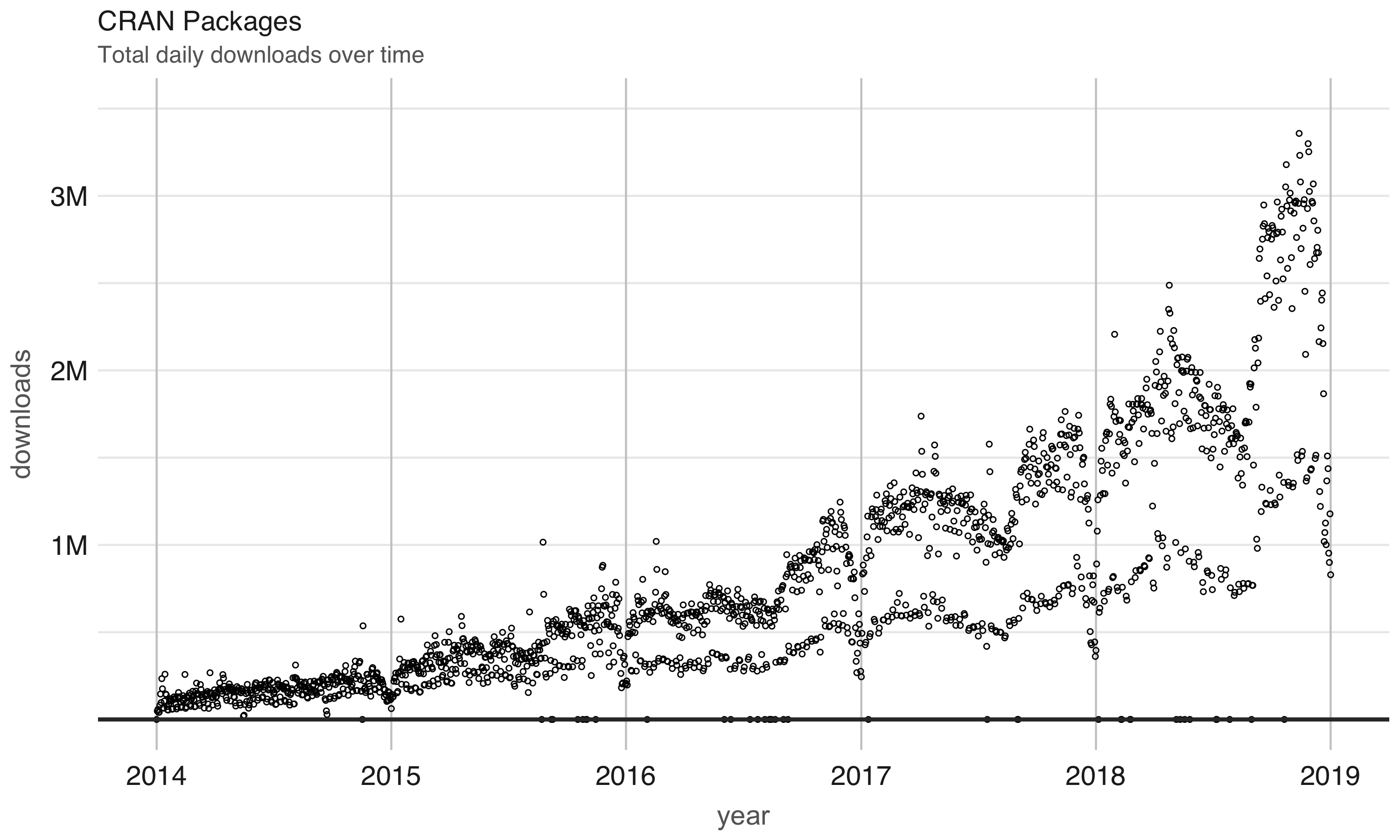

- The R Community provides a rich package archive, CRAN (The Comprehensive R Archive Network), which allows you to install ready-to-use packages to perform many tasks; most notably, high-quality data manipulation, visualizations and statistical models, many of which are only available in R. In addition, the R community is a welcoming and active group of talented individuals motivated to help you succeed. Many packages provided by the R community make R, by far, the best option for statistical computing. Some of the most downloaded R packages include: dplyr to manipulate data, cluster to analyze clusters and ggplot2 to visualize data. We can quantify the growth of the R community by plotting daily downloads of R packages in CRAN.

FIGURE 1.5: Daily downloads of CRAN packages.

Aside from statistics, R is also used in many other fields. The following ones are particularly relevant to this book:

- Data Science: Data science is based on knowledge and practices from statistics and computer science that turns raw data into understanding(Wickham and Grolemund 2016) by using data analysis and modeling techniques. Statistical methods provide a solid foundation to understand the world and perform predictions, while the automation provided by computing methods allows us to simplify statistical analysis and make it much more accessible. Some have advocated that statistics should be renamed data science(Wu 1997); however, data science goes beyond statistics by also incorporating advances in computing(Cleveland 2001). This book presents analysis and modeling techniques common in statistics, but applied to large datasets which requires incorporating advances in distributed computing.

- Machine Learning: Machine learning uses practices from statistics and computer science; however, it is heavily focused on automation and prediction. For instance, the term “machine learning” was coined by Arthur Samuel while automating a computer program to play checkers (Samuel 1959). While we could perform data science on particular games, here we need instead to automate the entire process. Therefore, this falls in the realm of machine learning, not data science. Machine learning makes it possible for many users to take advantage of statistical methods without being aware of the statistical methods that are being used. One of the first important applications of machine learning was to filter spam emails; in this case, it’s just not feasible to perform data analysis and modeling over each email account; therefore, machine learning automates the entire process of finding spam and filtering it out without having to involve users at all. This book will present the methods to transition data science workflows into fully automated machine learning methods through, for instance, providing support to build and export Spark pipelines that can be easily reused in automated environments.

- Deep Learning: Deep learning builds on knowledge of statistics, data science and machine learning to define models vaguely inspired by biological nervous systems. Deep learning models evolved from neural network models after the vanishing gradient problem was resolved by training one layer at a time (Hinton, Osindero, and Teh 2006) and have proven useful in image and speech recognition tasks. For instance, when using voice assistants like Siri, Alexa, Cortana or Google, the model performing the audio to text conversion is most likely to be based on deep learning models. While GPUs (Graphics Processing Units) have been successfully used to train deep learning models (Krizhevsky, Sutskever, and Hinton 2012), some datasets cannot be processed in a single GPU. It is also the case that deep learning models require huge amounts of data, which needs to be preprocessed across many machines before it can be fed into a single GPU for training. This book won’t make any direct references to deep learning models; however, the methods presented in this book can be used to prepare data for deep learning and, in the years to come, using deep learning with large-scale computing will become a common practice. In fact, recent versions of Spark have already introduced execution models optimized for training deep learning in Spark.

While working in any of the previous fields, you will be faced with increasingly large datasets or increasingly complex computations that are slow to execute or, at times, even impossible to process in a single computer. However, it is important to understand that Spark does not need to be the answer to all our computation problems; instead, when faced with computing challenges in R, the following techniques can be as effective:

- Sampling: A first approach to try is to reduce the amount of data being handled through sampling. However, data must be sampled properly by applying sound statistical principles. For instance, selecting the top results is not sufficient in sorted datasets; with simple random sampling, there might be underrepresented groups, which we could overcome with stratified sampling, which in turn adds complexity to properly select categories. It is out of the scope of this book to teach how to properly perform statistical sampling, but many online resources and literature are available on this subject.

- Profiling: One can try to understand why a computation is slow and make the necessary improvements. A profiler is a tool capable of inspecting code execution to help identify bottlenecks. In R, the R profiler, the profvis R package (“Profvis” 2018) and the RStudio profiler feature (“RStudio Profiler” 2018) allow you to easily retrieve and visualize a profile; however, it’s not always trivial to optimize.

- Scaling Up: Speeding up computation is usually possible by buying faster or more capable hardware, say, increasing your machine memory, upgrading your hard drive or procuring a machine with many more CPUs; this approach is known as “scaling up”. However, there are usually hard limits as to how much a single computer can scale up and, even with many CPUs, one needs to find frameworks that parallelize computation efficiently.

- Scaling Out: Finally, we can consider spreading computation and storage across multiple machines; this approach provides the highest degree of scalability since one can potentially use an arbitrary number of machines to perform a computation, and is commonly known as “scaling out”. However, spreading computation effectively across many machines is a complex endeavour, especially without using specialized tools and frameworks like Apache Spark.

This last point brings us closer to the purpose of this book, which is to bring the power of distributed computing systems provided by Apache Spark, to solve meaningful computation problems in Data Science and related fields, using R.
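Of the techniques above, sampling is the simplest to try directly in R. The following sketch, which assumes a made-up data frame with one rare group, contrasts simple random sampling with stratified sampling; the column names and the 5% sampling rate are illustrative choices, not recommendations.

```r
set.seed(42)  # make the random draws reproducible

# Hypothetical data: 1,000 rows where group "c" is rare (2% of rows).
data <- data.frame(
  group = rep(c("a", "b", "c"), times = c(700, 280, 20)),
  value = rnorm(1000)
)

# Simple random sample of 50 rows (5%); rare groups may be missed
# or badly underrepresented.
simple <- data[sample(nrow(data), size = 50), ]

# Stratified sample: draw 5% of the rows within each group, with at
# least one row per group, so every group is represented.
stratified <- do.call(rbind, lapply(split(data, data$group), function(g) {
  n <- max(1, round(0.05 * nrow(g)))
  g[sample(nrow(g), size = n), , drop = FALSE]
}))

# Compare how many rows each approach drew from every group.
print(table(simple$group))
print(table(stratified$group))
```

The stratified sample always contains rows from the rare group, while the simple random sample offers no such guarantee.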

1.1.4 sparklyr

When you think of the computation power that Spark provides and the ease of use of the R language, it is natural to want them to work together seamlessly. This is also what the R community expected: an R package that would provide an interface to Spark that was easy to use, compatible with other R packages and available in CRAN. With this goal, we started developing sparklyr. The first version, sparklyr 0.4, was released during the useR! 2016 conference; it included support for dplyr, DBI, modeling with MLlib and an extensible API that enabled extensions like H2O’s rsparkling package. Since then, many new features and improvements have been made available through sparklyr 0.5, 0.6, 0.7, 0.8 and 0.9.

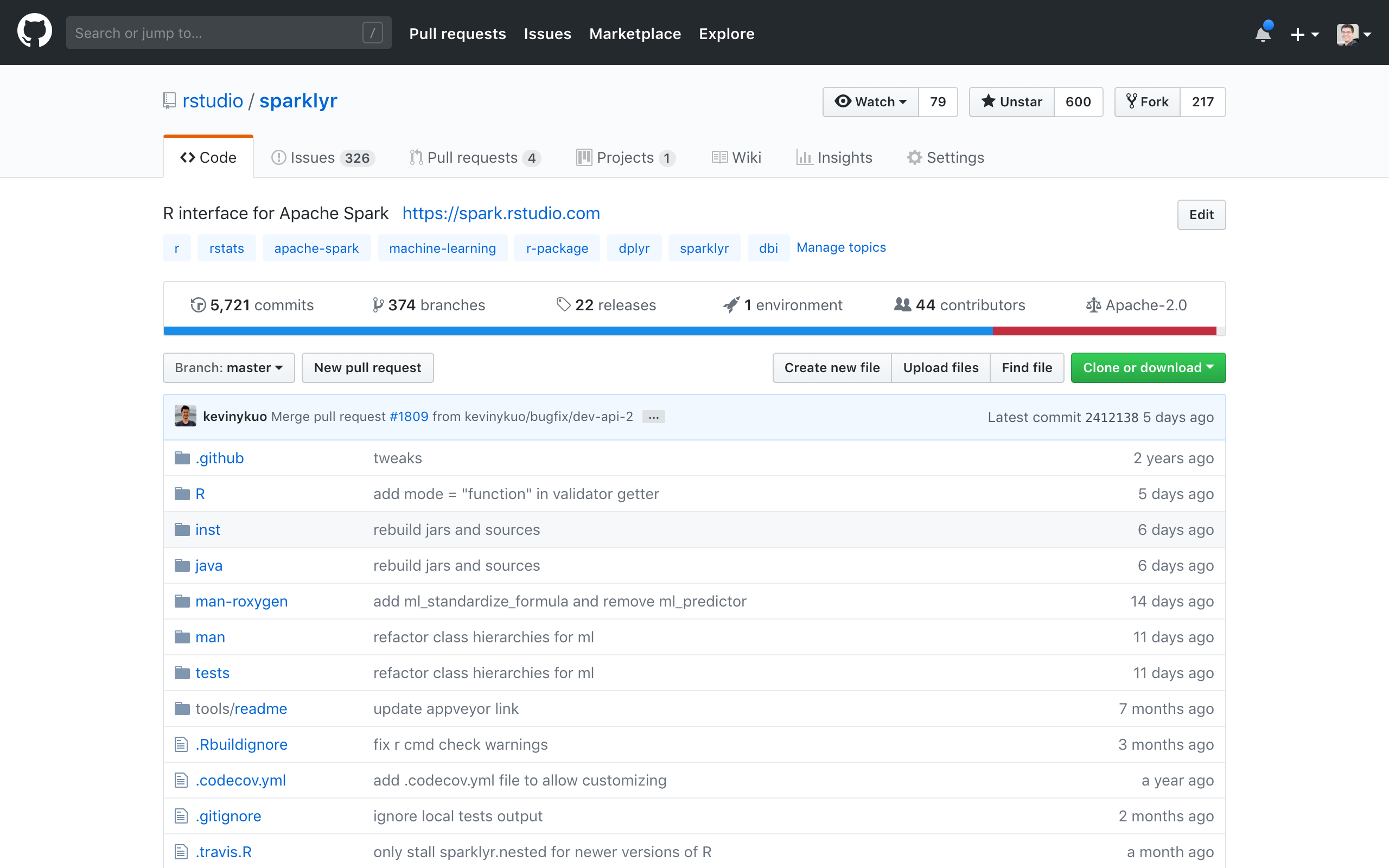

Officially, sparklyr is an R interface for Apache Spark. It’s available in CRAN and works like any other CRAN package, meaning that: it’s agnostic to Spark versions, it’s easy to install, it serves the R community, it embraces other packages and practices from the R community and so on. It’s hosted on GitHub under github.com/rstudio/sparklyr and licensed under Apache 2.0, which allows you to clone, modify and contribute back to this project.

When thinking about who should use sparklyr and why, the following roles come to mind:

- New Users: For new users, sparklyr provides the easiest way to get started with Spark. Our hope is that the early chapters of this book will get you up and running with ease and set you up for long-term success.

- Data Scientists: For data scientists that already use and love R, sparklyr integrates with many other R practices and packages like dplyr, magrittr, broom, DBI, tibble and many others that will make you feel at home while working with Spark. For those new to R and Spark, the combination of high-level workflows available in sparklyr and low-level extensibility mechanisms make it a productive environment to match the needs and skills of every data scientist.

- Expert Users: For those users that are already immersed in Spark and can write code natively in Scala, consider making your libraries available as a sparklyr custom extension to the R community, a diverse and skilled community that can put your contributions to good use while moving open science forward.

This book is titled “The R in Spark” as a way to describe and teach that area of overlap between Spark and R. sparklyr is the R package that materializes this overlap of communities, expectations, future directions, packages, and package extensions as well. Naming this book sparklyr or “Introduction to sparklyr” would have left behind a much more exciting opportunity – an opportunity to present this book as an intersection of the R and Spark communities. Both are solving very similar problems with a set of different skills and backgrounds; therefore, it is my hope that sparklyr can be a fertile ground for innovation, a welcoming place to newcomers, a productive place for experienced data scientists and an open community where cluster computing and modeling can come together.

1.2 Getting Started

From R, getting started with Spark using sparklyr and a local cluster is as easy as running:
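A minimal sketch of those steps, assuming R is already installed and the machine has internet access; each function is introduced in the rest of this section:

```r
install.packages("sparklyr")           # install sparklyr from CRAN
library(sparklyr)                      # load sparklyr
spark_install()                        # download and install Spark locally
sc <- spark_connect(master = "local")  # connect to the local cluster
```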

To make sure we can all run the code above and understand it, this section will walk you through the prerequisites, installing sparklyr and Spark, connecting to a local Spark cluster and briefly explaining how to use Spark.

However, if a Spark cluster and R environment have been made available to you, you do not need to install the prerequisites nor install Spark yourself. Instead, you should ask for the Spark master parameter and connect as follows; this parameter will be formally introduced under the clusters and connections chapters.
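As a sketch, the connection call would look like the following, where "<master>" is a placeholder rather than a real address and must be replaced with the value you are given:

```r
library(sparklyr)

# "<master>" is a placeholder, not a real address; replace it with the
# Spark master value provided for your cluster.
sc <- spark_connect(master = "<master>")
```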

1.2.1 Prerequisites

R can run on many platforms and environments; therefore, whether you use Windows, Mac or Linux, the first step is to install R from r-project.org. Detailed instructions are provided in the Installing R appendix.

Most people use programming languages with tools to make them more productive; for R, RStudio would be such a tool. Strictly speaking, RStudio is an Integrated Development Environment (or IDE), which also happens to support many platforms and environments. We strongly recommend you get RStudio installed if you haven’t done so already; see details under the Installing RStudio appendix.

Additionally, since Spark is built in the Scala programming language which is run by the Java Virtual Machine, you also need to install Java 8 in your system. It is likely that your system already has Java installed, but you should still check the version and update if needed as described in the Installing Java appendix.
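One way to check the installed Java version without leaving R is to call the java command through system(). This is a small sketch that assumes java is available on your system PATH:

```r
# Prints the version of the Java runtime found on the PATH.
system("java -version")
```

If Java is missing or not on the PATH, the command reports an error instead of a version.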

1.2.2 Installing sparklyr

Like many other R packages, sparklyr is available from CRAN and can be easily installed as follows:
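Installing from CRAN uses the standard install.packages() function:

```r
install.packages("sparklyr")
```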

The CRAN release of sparklyr contains the most stable version and it’s the recommended version to use; however, to try out features being developed in sparklyr, you can install directly from GitHub using the devtools package. First, install the devtools package and then install sparklyr as follows:
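A sketch of those two steps, assuming the repository location mentioned earlier in this chapter (github.com/rstudio/sparklyr):

```r
install.packages("devtools")                  # install devtools from CRAN
devtools::install_github("rstudio/sparklyr")  # install sparklyr from GitHub
```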

The sparklyr GitHub repository contains all the latest features, issues and project updates; it’s the place where sparklyr is actively developed and a resource that is helpful while troubleshooting issues.

FIGURE 1.6: GitHub Repository for sparklyr.

1.2.3 Installing Spark

Start by loading sparklyr with library(sparklyr).

This makes all sparklyr functions available in R, which is really helpful; otherwise, we would have to run each sparklyr command prefixed with sparklyr::.

As mentioned, Spark can be easily installed by running spark_install(); this will install the latest version of Spark locally on your computer. Go ahead and run spark_install(). Notice that this command requires internet connectivity to download Spark.

All the versions of Spark that are available for installation can be displayed with spark_available_versions():

## spark

## 1 1.6.3

## 2 1.6.2

## 3 1.6.1

## 4 1.6.0

## 5 2.0.0

## 6 2.0.1

## 7 2.0.2

## 8 2.1.0

## 9 2.1.1

## 10 2.2.0

## 11 2.2.1

## 12 2.3.0

## 13 2.3.1

## 14 2.3.2

## 15 2.4.0

A specific version can be installed using the Spark version and, optionally, by also specifying the Hadoop version. For instance, to install Spark 1.6.3, we would run:
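A sketch of that call; the Hadoop version in the commented variant is an illustrative assumption rather than a requirement:

```r
library(sparklyr)

# Install a specific Spark version, letting sparklyr choose the Hadoop build.
spark_install(version = "1.6.3")

# Optionally, name the Hadoop version as well ("2.6" is an assumed value):
# spark_install(version = "1.6.3", hadoop_version = "2.6")
```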

You can also check which versions are installed by running:
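The function that lists locally installed versions is spark_installed_versions(); the exact rows it prints depend on what is installed on your machine:

```r
library(sparklyr)

spark_installed_versions()
```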

spark hadoop dir

7 2.3.1 2.7 /spark/spark-2.3.1-bin-hadoop2.7

The path where Spark is installed is referenced as Spark’s home, which is defined in R code and system configuration settings with the SPARK_HOME identifier. When using a local Spark cluster installed with sparklyr, this path is already known and no additional configuration needs to take place.

Finally, in order to uninstall a specific version of Spark, you can run spark_uninstall() by specifying the Spark and Hadoop versions, for instance:
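A sketch that removes the Spark 1.6.3 installation from the earlier example. The Hadoop version "2.6" is an assumption; use the value reported by spark_installed_versions() on your machine:

```r
library(sparklyr)

# Both versions must match an entry listed by spark_installed_versions().
spark_uninstall(version = "1.6.3", hadoop_version = "2.6")
```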

1.2.4 Connecting to Spark

It’s important to mention that, so far, we’ve only installed a local Spark cluster. A local cluster is really helpful to get started, test code and troubleshoot with ease. Further chapters will explain where to find, install and connect to real Spark clusters with many machines, but for the first few chapters, we will focus on using local clusters.

To connect to this local cluster we simply run:
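A minimal sketch, assuming Spark was installed locally with spark_install() as described above:

```r
library(sparklyr)

# Connect to the local cluster and keep the connection in `sc`,
# which the following examples pass to other sparklyr functions.
sc <- spark_connect(master = "local")
```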

The master parameter identifies the “main” machine of the Spark cluster; this machine is often called the driver node. While working with real clusters using many machines, most machines will be worker machines and one will be the master. Since we only have a local cluster with a single machine, we will use "local" for now.

1.2.5 Using Spark

Now that you are connected, we can run a few simple commands. For instance, let’s start by loading some data into Apache Spark.

To accomplish this, let’s first create a text file by running:
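One way to do this in base R is with cat(). The file name hello.txt is an assumption chosen to match the “hello” dataset name used below, and the file is written to the current working directory:

```r
# Write a one-line text file to the current working directory.
cat("Hello World!", file = "hello.txt")
```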

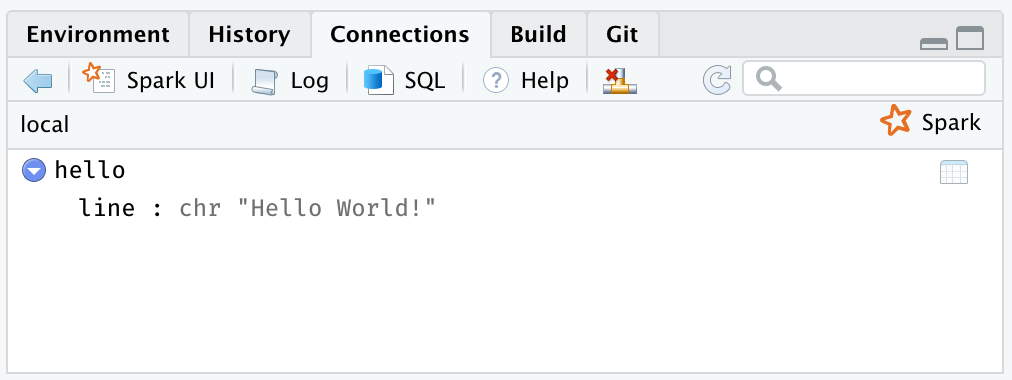

We can now read this text file back from Spark by running:
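A sketch of the call, assuming `sc` is the connection created with spark_connect() and that the file is named hello.txt in the working directory:

```r
library(sparklyr)

# Arguments: the Spark connection, the dataset name in Spark, the file path.
spark_read_text(sc, "hello", "hello.txt")
```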

## # Source: spark<hello> [?? x 1]

## line

## * <chr>

## 1 Hello World!

Congrats! You have successfully connected and loaded your first dataset into Spark.

We’ll explain what’s going on in spark_read_text(). The first parameter, sc, gives the function a reference to the active Spark Connection that was earlier created with spark_connect(). The second parameter names this dataset in Spark. The third parameter specifies a path to the file to load into Spark. Now, spark_read_text() returns a reference to the dataset in Spark which R automatically prints. Whenever a Spark dataset is printed, Spark will collect some of the records and display them for you. In this particular case, that dataset contains just one row for the line: Hello World!.

We will now use this simple example to present various useful tools in Spark we should get familiar with.

1.2.5.1 Web Interface

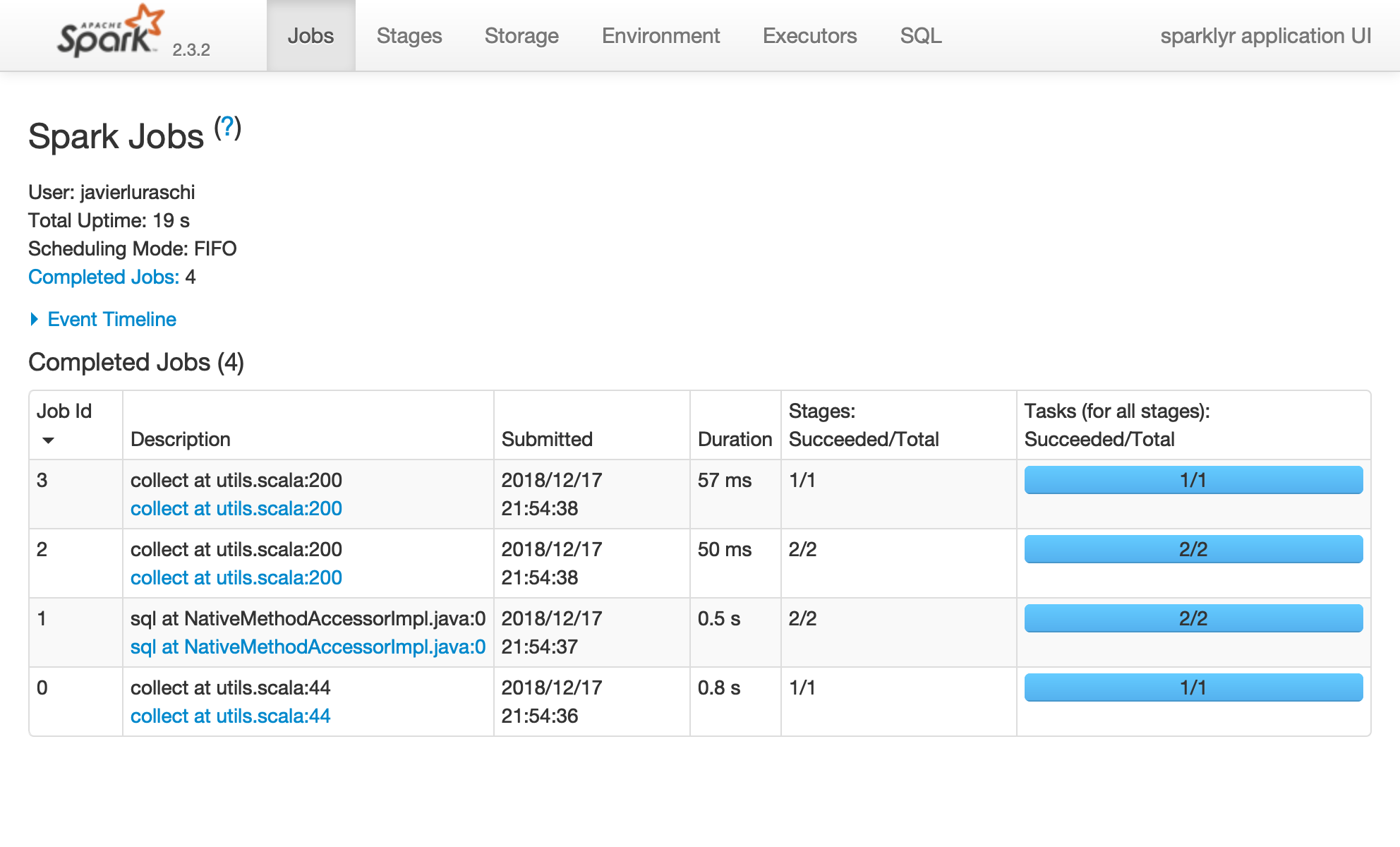

Most of the Spark commands are executed from the R console; however, monitoring and analyzing execution is done through Spark’s web interface. This interface is a web page provided by Spark which can be accessed by running:
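The sparklyr function that opens this page is spark_web(); the sketch assumes `sc` is the active connection created earlier:

```r
library(sparklyr)

# Opens Spark's web interface for this connection in the default browser.
spark_web(sc)
```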

FIGURE 1.7: Apache Spark Web Interface.

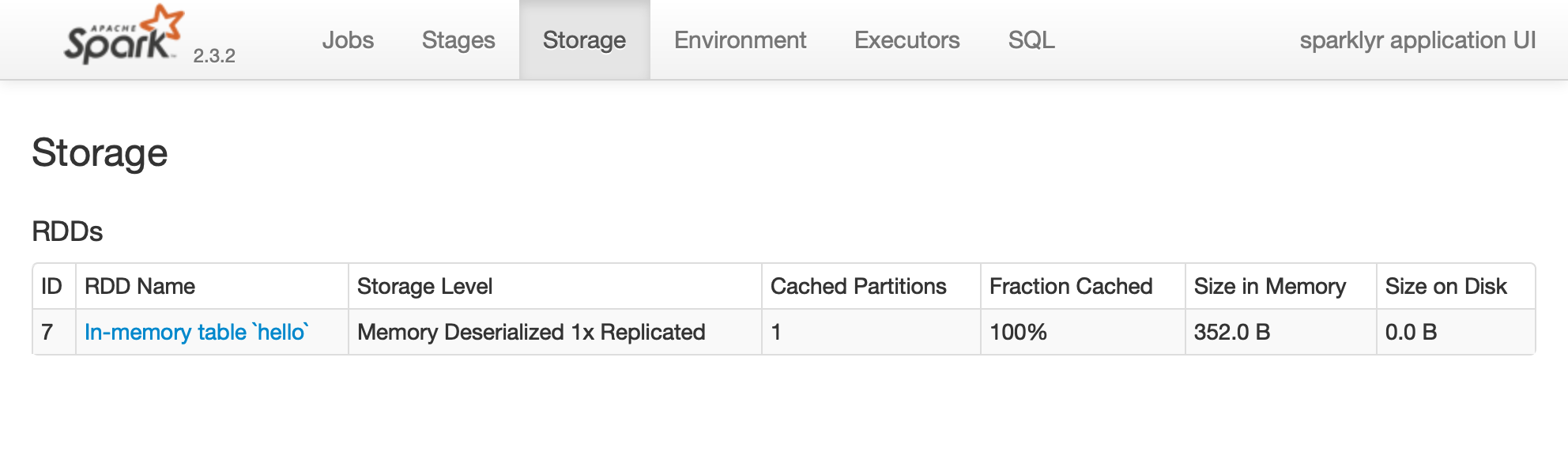

As we mentioned, printing the “hello” dataset collected a few records to be displayed in the R console. You can see in the Spark web interface that a job was started to collect this information back from Spark. You can also select the storage tab to see the “hello” dataset cached in-memory in Spark:

FIGURE 1.8: Apache Spark Web Interface - Storage Tab.

The caching section in the tuning chapter will cover this in detail, but as a start, it’s worth noticing that this dataset is fully loaded into memory, since the fraction cached is 100%. It is also useful to note the size in memory column, which tracks the total memory being used by this dataset.

1.2.5.2 Logs

Another common tool in Spark that you should familiarize yourself with is the Spark logs. A log is just a text file where Spark will append information relevant to the execution of tasks in the cluster. For local clusters, we can retrieve all the logs by running:
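A sketch using spark_log(), assuming a local connection named sc (created here only so that the snippet runs on its own). The entries you see will differ from run to run:

```r
library(sparklyr)

# Assumption: a local connection, created here so the snippet is self-contained.
sc <- spark_connect(master = "local")

# Print the most recent entries from the Spark log for this connection.
spark_log(sc)
```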

## 18/10/09 19:41:46 INFO Executor: Finished task 0.0 in stage 5.0 (TID 5)...

## 18/10/09 19:41:46 INFO TaskSetManager: Finished task 0.0 in stage 5.0...

## 18/10/09 19:41:46 INFO TaskSchedulerImpl: Removed TaskSet 5.0, whose...

## 18/10/09 19:41:46 INFO DAGScheduler: ResultStage 5 (collect at utils...

## 18/10/09 19:41:46 INFO DAGScheduler: Job 3 finished: collect at utils...

Or we can retrieve specific log entries containing, say, sparklyr, by using the filter parameter as follows:
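A sketch of the filtered call, again assuming a local connection named sc (created here only so that the snippet runs on its own):

```r
library(sparklyr)

# Assumption: a local connection, created here so the snippet is self-contained.
sc <- spark_connect(master = "local")

# Keep only the log entries that mention "sparklyr".
spark_log(sc, filter = "sparklyr")
```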

## 18/10/09 18:53:23 INFO SparkContext: Submitted application: sparklyr

## 18/10/09 18:53:23 INFO SparkContext: Added JAR...

## 18/10/09 18:53:27 INFO Executor: Fetching spark://localhost:52930/...

## 18/10/09 18:53:27 INFO Utils: Fetching spark://localhost:52930/...

## 18/10/09 18:53:27 INFO Executor: Adding file:/private/var/folders/...

You won’t need to read logs or filter them while using Spark, except in cases where you need to troubleshoot a failed computation. In those cases, logs are an invaluable resource to have at hand and are therefore worth introducing early on.

1.2.6 Disconnecting

For local clusters (really, any cluster), once you are done processing data, you should disconnect by running:
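A sketch using spark_disconnect(), assuming a local connection named sc (created here only so that the snippet runs on its own):

```r
library(sparklyr)

# Assumption: a local connection, created here so the snippet is self-contained.
sc <- spark_connect(master = "local")

# Close this specific connection once the work is done.
spark_disconnect(sc)
```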

This will terminate the connection to the cluster as well as the cluster tasks. If multiple Spark connections are active, or if the connection instance sc is no longer available, you can also disconnect all your Spark connections by running:
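A sketch using spark_disconnect_all(), which takes no connection argument. The local connection below is an assumption, opened only so that there is something to disconnect:

```r
library(sparklyr)

# Assumption: a local connection, created here so the snippet is self-contained.
sc <- spark_connect(master = "local")

# Close every open Spark connection; no reference to sc is needed.
spark_disconnect_all()
```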

Notice that exiting R or RStudio, or restarting your R session, will also cause the Spark connection to terminate, which in turn terminates the Spark cluster and discards any cached data that is not explicitly persisted.

1.2.7 Using RStudio

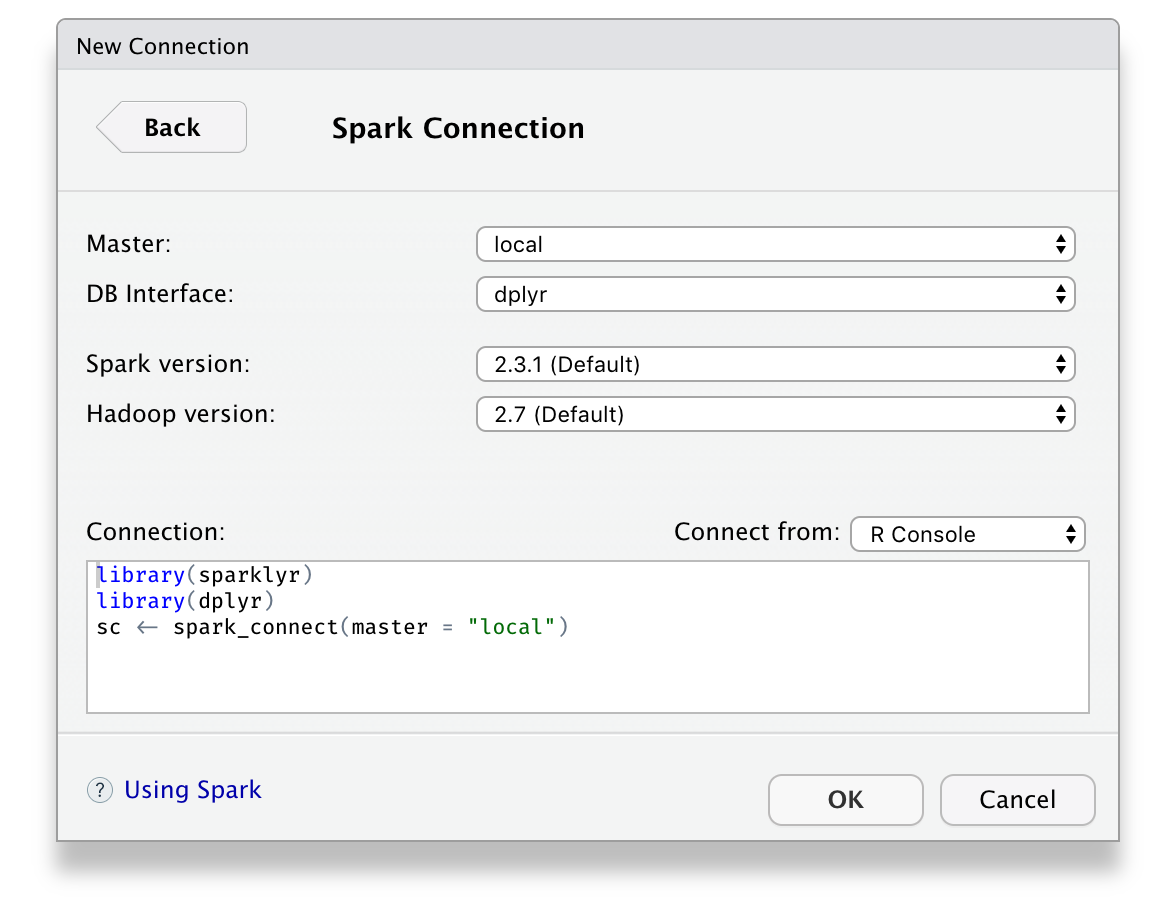

Since it’s very common to use RStudio with R, sparklyr provides RStudio extensions to help simplify your workflows and increase your productivity while using Spark in RStudio. If you are not familiar with RStudio, take a quick look at the Using RStudio appendix section. Otherwise, there are a couple of extensions worth highlighting.

First, instead of starting a new connection using spark_connect() from RStudio’s R console, you can use the new connection action from the connections pane and then select the Spark connection. You can then customize the versions and connect to Spark, which will simply generate the right spark_connect() command and execute it in the R console for you.

FIGURE 1.9: RStudio New Spark Connection.

Second, once connected to Spark, either by using the R console or through RStudio’s connections pane, RStudio will display your available datasets in the connections pane. This is a useful way to track your existing datasets and provides an easy way to explore each of them.

FIGURE 1.10: RStudio Connections Pane.

Additionally, an active connection provides the following custom actions:

- Spark UI: Opens the Spark web interface, a shortcut to spark_web(sc).

- Log: Opens the Spark web logs, a shortcut to spark_log(sc).

- SQL: Opens a new SQL query, see DBI and SQL support in the data analysis chapter.

- Help: Opens the reference documentation in a new web browser window.

- Disconnect: Disconnects from Spark, a shortcut to spark_disconnect(sc).

The rest of this book will use plain R code. It is up to you to execute this code in the R console, RStudio, Jupyter Notebooks or any other tool that supports executing R code, since the code provided in this book executes in any R environment.

1.2.8 Resources

While we’ve put significant effort into simplifying the onboarding process, there are many additional resources that can help you troubleshoot particular issues while getting started. More generally, they introduce you to the broader Spark and R communities, where you can get specific answers, discuss topics and connect with many users actively using Spark with R.

- Documentation: This should be your first stop to learn more about Spark when using R. The documentation is kept up to date with examples, reference functions and many more relevant resources, spark.rstudio.com.

- Blog: To keep up to date with major sparklyr announcements, you can follow the RStudio blog, blog.rstudio.com/tags/sparklyr.

- Community: For general sparklyr questions, you can post them in the RStudio Community tagged as sparklyr, community.rstudio.com/tags/sparklyr.

- Stack Overflow: For general Spark questions, Stack Overflow is a great resource, stackoverflow.com/questions/tagged/apache-spark; there are also many topics specifically about sparklyr, stackoverflow.com/questions/tagged/sparklyr.

- GitHub: If you believe something needs to be fixed, open a GitHub issue or send us a pull request, github.com/rstudio/sparklyr.

- Gitter: For urgent issues, or to keep in touch, you can chat with us in Gitter, gitter.im/rstudio/sparklyr.

1.3 Recap

This chapter presented Spark as a modern and powerful computing platform, R as an easy-to-use computing language with solid foundations in statistical methods, and sparklyr as a project bridging both technologies and communities. You learned about the prerequisites required to work with Spark, how to install a local cluster using spark_install(), connect to Spark using spark_connect(), load a simple dataset, launch the web interface and display logs using spark_web(sc) and spark_log(sc) respectively, and disconnect from Spark using spark_disconnect(). We closed this chapter by presenting the RStudio extensions that sparklyr provides.

It is my hope that this chapter will help anyone interested in learning cluster computing using Spark and R to get started, ready to experiment on their own and ready to tackle actual data analysis and modeling problems, which the next two chapters will introduce. The next chapter, analysis, will present data analysis as the process to inspect, clean, and transform data with the goal of discovering useful information. Modeling can be considered part of data analysis; however, it deserves its own chapter so that you can truly understand and take advantage of the modeling functionality available in Spark. In a world where the total amount of information is growing exponentially, learning how to perform data analysis and modeling at scale will help you tackle the problems and opportunities humanity is facing today.
